Application concept of QNN technology in football prediction

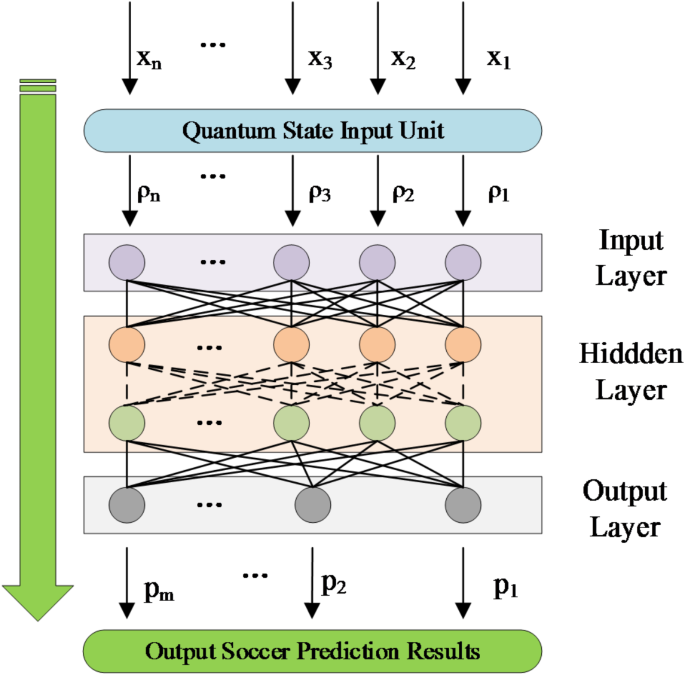

In today’s highly digital and data-driven era, accurate prediction of football match outcomes has become a prominent research area, and the emergence of quantum neural network (QNN) technology offers a new way to approach this complex problem [14]. Match outcomes are influenced by multiple interrelated and dynamically changing factors, including team strength, player condition and ability, tactical arrangements, home and away conditions, and the match environment. Traditional prediction methods have limited ability to capture the complicated relationships among these factors. The key advantage of QNN technology lies in its capacity to process high-dimensional data and, through the properties of quantum states, uncover hidden patterns and nonlinear correlations within the data. Applied to football prediction, a QNN can analyze the combined effects of these factors on match outcomes [15]. For instance, by learning from large volumes of historical match data, it can discern how different teams perform under specific tactics and capture the nuances of player coordination. It can also detect trends in player condition and thereby predict future performance more accurately [16]. Moreover, it can incorporate seemingly minor but potentially crucial factors, such as pitch conditions and weather, into a comprehensive prediction framework that is continuously updated as new data arrive [17]. The application concept of QNN technology in football prediction is presented in Fig. 1.

Fig. 1. Application concept of QNN technology in football prediction.

In Fig. 1, the QNN model constructed in this study integrates quantum mechanics concepts with a neural network architecture. It aims to leverage the superposition and entanglement properties of quantum states to handle the multidimensional, dynamically changing information involved in football match prediction [18]. In terms of network hierarchy, the model consists of input, hidden, and output layers that work together to process and transfer information. The input layer serves as the data entry point and receives preprocessed raw data from key aspects of the match: recent goals scored and conceded by each team, player fitness data, recent match performance scores, and environmental data from the match venue [19]. To feed these classical data into the model, a quantum encoding method maps each input feature to a quantum state. This transformation is performed in the “Quantum State Input Module”: classical values are encoded into qubits through specific quantum gate operations, so that the data enter the model as quantum states and participate in the subsequent quantum computation [20]. The hidden layers play a critical role in feature extraction and in modeling complex relationships; multiple hidden layers are used to increase the model’s expressiveness and learning depth. Neurons within the hidden layers interact through weighted connections, which comprise both traditional scalar weights and quantum-state correlations introduced by quantum operations.

Solid lines in Fig. 1 represent conventional neuron connections, whose weights are optimized by classical training algorithms such as gradient descent to adjust the signal strength between neurons and thereby learn linear relationships within the data. Dashed lines represent entanglement between quantum states, realized through quantum gate operations; these allow qubits to form non-local correlations that help capture complex nonlinear relationships within the data. This is crucial for modeling intricate scenarios in football match prediction, such as the subtle interactions between team tactics and player performance, or the potential impact of players’ psychological states on the course of the match [21].
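The encoding and entanglement steps described above can be illustrated with a minimal two-qubit sketch. It assumes angle encoding (each feature pre-scaled to [0, π] and loaded with an RY rotation) and a single CNOT as the entangling gate behind a dashed-line connection; the text does not specify either choice, so both are illustrative assumptions, and the helper names are hypothetical. A plain-Python state vector is used so that no quantum SDK is required.

```python
import math


def ry_state(theta):
    """Single-qubit state RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def encode_pair(x0, x1):
    """Angle-encode two features (assumed pre-scaled to [0, pi]) as a
    two-qubit product state; amplitude index = 2 * q0 + q1."""
    a, b = ry_state(x0), ry_state(x1)
    return [a[i] * b[j] for i in (0, 1) for j in (0, 1)]


def cnot(state):
    """CNOT with qubit 0 as control and qubit 1 as target:
    swaps the amplitudes of |10> and |11>."""
    out = list(state)
    out[2], out[3] = out[3], out[2]
    return out


def probabilities(state):
    """Born rule: probability of each basis state |00>, |01>, |10>, |11>."""
    return [abs(amp) ** 2 for amp in state]


if __name__ == "__main__":
    product = encode_pair(math.pi / 2, 0.0)
    print([round(p, 3) for p in probabilities(product)])        # [0.5, 0.0, 0.5, 0.0]
    print([round(p, 3) for p in probabilities(cnot(product))])  # [0.5, 0.0, 0.0, 0.5]
```

Before the CNOT, qubit 1 is always 0 regardless of qubit 0. After it, only |00⟩ and |11⟩ remain, each with probability 0.5, so the two qubits are perfectly correlated; this joint dependence is the kind of relationship the dashed connections are meant to carry.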

The number of neurons in the hidden layers is set according to the size and complexity of the dataset and fine-tuned during training, so that the model can extract the latent features and patterns in the data while avoiding overfitting. To help distinguish the functions of the computational layers, neurons are color coded (e.g., purple, orange, green, grey), with each color indicating the type of information a neuron predominantly handles. For instance, purple neurons focus on team tactical information, while orange neurons focus on individual player status; these assignments are, however, adjusted adaptively during training and optimization. The output layer generates the final prediction results. In football match prediction, the outputs may include the match outcome (win, loss, or draw), goals scored, and goals conceded. These outputs are derived from the quantum states produced by the hidden layers, which are converted into interpretable classical values through quantum measurement and post-processing. For match outcomes, the probability distribution of the measured quantum states is sampled and statistically analyzed, and the final prediction is obtained by combining these statistics with the decision rules learned during training. For goals scored and conceded, the relevant numerical information is extracted from the quantum states, and meaningful predicted values are recovered by inverting the quantum encoding and the data scaling. The entire model follows a feedforward mechanism in which information is processed sequentially through each layer without feedback loops. This keeps the model computationally efficient and stable and makes the training process easier to control and optimize. With this architecture and information flow, the QNN model can exploit the characteristics of quantum mechanics to process and analyze complex match information and thereby achieve more accurate and reliable predictions than traditional models. This provides an innovative technical approach to football match prediction, with considerable theoretical value and practical application potential.
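The readout step can be sketched as follows, assuming a two-qubit output register whose basis states are mapped to outcomes (|00⟩ win, |01⟩ draw, |10⟩ loss, with |11⟩ discarded) and a linear rescaling of an expectation value ⟨Z⟩ ∈ [−1, 1] to a goal count. Both the mapping and the `max_goals` scale are hypothetical; the text states only that measurement statistics and an inverse of the encoding and scaling are used.

```python
import random
from collections import Counter

# Hypothetical readout convention: three basis states of a two-qubit output
# register are mapped to outcomes; |11> is treated as invalid and discarded.
OUTCOMES = {"00": "win", "01": "draw", "10": "loss"}
BASIS = ["00", "01", "10", "11"]


def sample_outcomes(probs, shots, seed=0):
    """Simulate `shots` measurements of a register whose basis-state
    probabilities are `probs` (ordered 00, 01, 10, 11) and return the
    estimated win/draw/loss probabilities, renormalized over valid readouts.
    Raises ZeroDivisionError if no valid readout is observed."""
    rng = random.Random(seed)
    counts = Counter(rng.choices(BASIS, weights=probs, k=shots))
    valid = sum(counts[b] for b in OUTCOMES)
    return {label: counts[b] / valid for b, label in OUTCOMES.items()}


def decode_goals(expval_z, max_goals=5.0):
    """Map an expectation value <Z> in [-1, 1] linearly onto [0, max_goals];
    a hypothetical inverse of the scaling applied at encoding time."""
    return (expval_z + 1.0) / 2.0 * max_goals


if __name__ == "__main__":
    est = sample_outcomes([0.5, 0.3, 0.2, 0.0], shots=10_000)
    print({k: round(v, 2) for k, v in est.items()})  # approximately 0.5 / 0.3 / 0.2
    print(decode_goals(0.2))
```

With more shots the estimated frequencies converge to the underlying probabilities; renormalizing away the discarded |11⟩ readouts is one of several reasonable conventions.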

Technical analysis of the QNN

As an innovative model that combines the strengths of quantum computing and neural networks, the QNN is well suited to football match prediction and addresses several challenges faced by traditional methods. Quantum computing, based on qubits and the principles of superposition and entanglement, offers computational advantages that give QNNs a solid foundation for handling complex data. For the multi-dimensional data involved in football prediction, QNNs exploit superposition: a register of \(n\) qubits is described by amplitudes over \(2^n\) basis states, so many candidate configurations are represented within a single quantum state. For instance, when analyzing team tactics, the outcomes of different tactical arrangements can be represented as a superposition within the quantum system. Entanglement, in turn, allows the model to couple factors such as player status and the match environment. For example, a player’s physical condition and mental state and match-related factors (such as weather and the home-crowd atmosphere) can be encoded as entangled qubits, so that they are treated jointly rather than independently and a change in one factor is reflected in the correlated factors. Together, superposition and entanglement enable QNNs to capture complex data relationships that traditional models struggle to detect. The QNN architecture can therefore integrate dynamically changing, interrelated factors such as tactical strategies, player status, and match environment, whose complex interactions traditional models often struggle to process in real time.

By contrast, QNNs can adjust the weight allocation and correlation analysis of each factor by exploiting the properties of qubits. For example, when a tactical change occurs suddenly during a match, a QNN can update the learned relationship between tactics and player performance through its quantum gate operations and thus adapt to the new dynamics of the match. Likewise, after unexpected events such as player injuries, it can reassess overall team strength and match progress from the updated quantum states. Compared with the delays and limitations of traditional models under uncertainty, QNNs exhibit greater adaptability and flexibility, which improves the accuracy and reliability of predictions [22]. QNNs retain this adaptability while learning from large volumes of historical data. Leveraging quantum computing, they can explore the solution space broadly in fewer training iterations and efficiently uncover hidden patterns in historical match data; for instance, deep learning on historical data allows a QNN to identify subtle patterns such as the goal-scoring tendencies of specific player combinations in particular match situations. When new uncertainties arise, such as injuries or newly introduced tactics, QNNs can quickly adjust their parameters because the qubit states can be reconfigured with new information, whereas traditional models with fixed structures and parameters often fail to adapt, leading to larger prediction errors. QNNs thus overcome this limitation and maintain high predictive performance [23].

The quantum neuron units (QNEs) and quantum weights of a QNN are the components that provide these capabilities. QNEs transform input signals through quantum gate operations, converting features such as the current number of goals and historical match results into quantum state representations so that the data participate in the computation in quantum form. Quantum weights use entanglement to enable weight sharing and dynamic adjustment, so that the relationships between different features influence one another within the quantum states. For example, when the current number of goals is considered together with historical head-to-head records, the quantum weights adjust the contribution of each feature to the outcome prediction according to their degree of entanglement. This mechanism allows QNNs to capture nonlinear interactions and quantum correlations that traditional linear models cannot detect. Compared with traditional ML or statistical models, QNNs therefore have clear advantages in capturing hidden patterns and subtle nonlinear relationships in football data. Traditional models typically rely on linear combinations or simple nonlinear functions, which struggle to uncover the value hidden in the highly complex interrelationships of match data, and they are prone to getting trapped in local optima, which limits their exploration of the data space. QNNs, based on superposition and entanglement, overcome these limitations and enable deeper exploration of hidden patterns. For example, when analyzing the interplay between a team’s offensive and defensive strategies, a QNN can use entanglement to capture how subtle changes in the attacking strategy influence the positioning and performance of defending players, a relationship that traditional models often overlook or model inaccurately. By exploring the data space more comprehensively and identifying the subtle but critical nonlinear relationships that traditional models miss, QNNs provide richer information for football match prediction and open new pathways for progress in the field [24].
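To make the idea of a quantum weight concrete, the sketch below models a single-qubit QNE in which a feature \(x\) is encoded by RY(\(x\)) and the weight \(w\) is a second, trainable RY rotation; the output is the expectation value ⟨Z⟩. This is a common textbook construction chosen for illustration, a simplification without entanglement, and not necessarily the neuron used in the study; the function names are hypothetical.

```python
import math


def ry(theta):
    """Real 2x2 matrix of the single-qubit rotation RY(theta)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def apply(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


def quantum_neuron(x, w):
    """Hypothetical single-qubit QNE: encode feature x with RY(x), apply the
    trainable quantum weight RY(w), and return <Z> = P(0) - P(1)."""
    state = apply(ry(w), apply(ry(x), [1.0, 0.0]))
    return state[0] ** 2 - state[1] ** 2


if __name__ == "__main__":
    # Because RY rotations compose additively, the output equals cos(x + w).
    print(quantum_neuron(0.3, 0.4), math.cos(0.7))
```

Since the output is cos(\(x + w\)), the weight acts through a rotation angle rather than a scalar multiplier, and the cosine readout plays the role of a built-in nonlinear activation.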

A QNE is the basic component of a QNN that converts an input signal into an output signal and adjusts its parameters through training. Its core elements are the quantum input, the quantum weights, and the quantum activation function. Let the input vector be \(\vec{x}=(x_1,x_2,\ldots,x_n)\), the quantum weight vector be \(\vec{w}=(w_1,w_2,\ldots,w_n)\), and the quantum activation function be \(f_Q\) [25]. The output \(y\) of the QNE is then:

$$y=f_Q\left(\sum_{i=1}^{n} x_i \otimes w_i\right) \tag{1}$$

Here, ⊗ denotes a product operation between quantum states. During training, the error function is typically defined as the discrepancy between the predicted and true outputs, for example the mean squared error:

$$E=\frac{1}{2}\sum_{j}\left(y_j-t_j\right)^2 \tag{2}$$

where \(y_j\) and \(t_j\) are the predicted and true outputs, respectively. As in traditional neural networks, the parameters are updated by gradient descent:

$$w_i \leftarrow w_i-\alpha\frac{\partial E}{\partial w_i} \tag{3}$$

where \(\alpha\) is the learning rate. To predict the outcome of a football match (win, draw, or loss), the following seven features are selected as inputs:

(1) Team A’s recent goals scored, \(x_1\);

(2) Team A’s recent goals conceded, \(x_2\);

(3) Team B’s recent goals scored, \(x_3\);

(4) Team B’s recent goals conceded, \(x_4\);

(5) the number of wins for Team A in historical meetings between the two teams, \(x_5\);

(6) the number of draws for Team A in historical meetings between the two teams, \(x_6\);

(7) the number of losses for Team A in historical meetings between the two teams, \(x_7\).
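As an illustration of how the seven inputs above could be assembled, the sketch below computes \(x_1,\ldots,x_7\) from a list of past matches. The `Match` record, the chronological ordering of the list, and the five-match window for "recent" form are hypothetical choices; the study does not state how recent form is measured. Before angle encoding, these raw counts would still need to be scaled, for example to [0, π].

```python
from dataclasses import dataclass


@dataclass
class Match:
    """Hypothetical record of a finished match (list assumed chronological)."""
    home: str
    away: str
    home_goals: int
    away_goals: int


def team_form(matches, team, last_n=5):
    """Goals scored and conceded by `team` over its last `last_n` matches."""
    recent = [m for m in matches if team in (m.home, m.away)][-last_n:]
    scored = sum(m.home_goals if m.home == team else m.away_goals for m in recent)
    conceded = sum(m.away_goals if m.home == team else m.home_goals for m in recent)
    return scored, conceded


def head_to_head(matches, a, b):
    """Wins, draws, and losses of team `a` in all meetings with team `b`."""
    wins = draws = losses = 0
    for m in matches:
        if {m.home, m.away} != {a, b}:
            continue
        goals_a, goals_b = ((m.home_goals, m.away_goals) if m.home == a
                            else (m.away_goals, m.home_goals))
        if goals_a > goals_b:
            wins += 1
        elif goals_a == goals_b:
            draws += 1
        else:
            losses += 1
    return wins, draws, losses


def feature_vector(matches, a, b):
    """Inputs x1..x7 in the order listed above."""
    return [*team_form(matches, a), *team_form(matches, b), *head_to_head(matches, a, b)]
```

For example, `feature_vector(matches, "A", "B")` returns the list `[x1, x2, x3, x4, x5, x6, x7]` for Team A against Team B, which can then be normalized and passed to the QNE of Eq. (4).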

Let the quantum weight vector be \(\vec{w}=(w_1,w_2,\ldots,w_7)\) and the quantum activation function be \(f_Q\). The predicted output \(y\) is then given by Eq. (4):

$$y=f_Q\left(\sum_{i=1}^{7} x_i \otimes w_i\right) \tag{4}$$

Here, ⊗ denotes the product operation between quantum states; its concrete implementation depends on the quantum computing framework and algorithm used. To train and optimize the weights, the error function \(E\) is defined as the cross-entropy loss:

$$E=-\sum_{k\in\{\text{win},\,\text{draw},\,\text{loss}\}} t_k \log\left(y_k\right) \tag{5}$$

where \(t_k\) is the one-hot encoding of the true outcome (win, draw, or loss) and \(y_k\) is the corresponding probability predicted by the model [26]. The core of this QNN-based prediction concept is the joint consideration of several key factors related to the match outcome. The team’s recent offensive and defensive performance, historical head-to-head results, and other data are converted into input features and combined with the quantum weights through interacting quantum operations, capturing the nonlinearity and potential quantum correlations among these factors [27]. This goes beyond simple linear combinations of data or traditional statistical analysis: the superposition and entanglement of quantum states enable the model to explore hidden patterns and subtle relationships that are difficult to find with traditional methods [28]. For example, the trend in the number of goals a team scores and concedes over a given period may be correlated with the match outcome in a complex, nonlinear way, and a QNN can effectively mine this correlation [29,30,31].
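Equations (3) to (5) can be tied together in a small training sketch. Because the text leaves the ⊗ operation and the three-way output of Eq. (4) unspecified, the sketch makes explicit assumptions: each outcome \(k\) gets its own single-qubit neuron in which feature \(x_i\) and weight \(w_{k,i}\) enter as a rotation RY(\(w_{k,i}x_i\)); the three ⟨Z⟩ readouts are turned into probabilities \(y_k\) with a softmax; and \(\partial E/\partial w\) is computed with the parameter-shift rule, a standard way to differentiate rotation-gate circuits that the study does not explicitly name. All function names are hypothetical.

```python
import math


def expval_z(angles):
    """<Z> after applying RY(a) for each a in `angles` to |0> on one qubit.
    Consecutive RY rotations add, so this equals cos(sum(angles))."""
    return math.cos(sum(angles))


def scores(W, x):
    """One hypothetical single-qubit neuron per outcome (win, draw, loss):
    gate i of neuron k is RY(W[k][i] * x[i]), a stand-in for x_i (x) w_i."""
    return [expval_z([w * xi for w, xi in zip(row, x)]) for row in W]


def softmax(s):
    m = max(s)
    e = [math.exp(v - m) for v in s]
    total = sum(e)
    return [v / total for v in e]


def cross_entropy(y, t):
    """Eq. (5): E = -sum_k t_k * log(y_k) for a one-hot target t."""
    return -sum(tk * math.log(yk) for tk, yk in zip(t, y) if tk)


def param_shift_grad(row, x, i):
    """d<Z>/dW[k][i] for one neuron: parameter-shift rule on gate i, times
    the chain-rule factor x[i] because the gate angle is W[k][i] * x[i]."""
    angles = [w * xi for w, xi in zip(row, x)]
    plus, minus = angles[:], angles[:]
    plus[i] += math.pi / 2
    minus[i] -= math.pi / 2
    return x[i] * (expval_z(plus) - expval_z(minus)) / 2


def train_step(W, x, t, lr=0.1):
    """One gradient-descent update (Eq. (3)) of all weights on the loss of Eq. (5)."""
    y = softmax(scores(W, x))
    dE_ds = [yk - tk for yk, tk in zip(y, t)]  # gradient of softmax + cross-entropy
    return [[w - lr * dE_ds[k] * param_shift_grad(row, x, i) for i, w in enumerate(row)]
            for k, row in enumerate(W)]


if __name__ == "__main__":
    x = [0.6, 0.2, 0.4, 0.4, 0.2, 0.2, 0.0]  # hypothetical scaled x1..x7
    t = [1.0, 0.0, 0.0]                      # true outcome: win
    W = [[0.5] * 7, [1.0] * 7, [1.5] * 7]
    for step in range(3):
        print(step, round(cross_entropy(softmax(scores(W, x)), t), 4))
        W = train_step(W, x, t)
```

Running the `__main__` block prints a cross-entropy loss that decreases over the first few updates. On real hardware, each `expval_z` call would instead be estimated from repeated measurement shots, so the gradients would carry sampling noise.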

Optimal design of QNN technology in football prediction

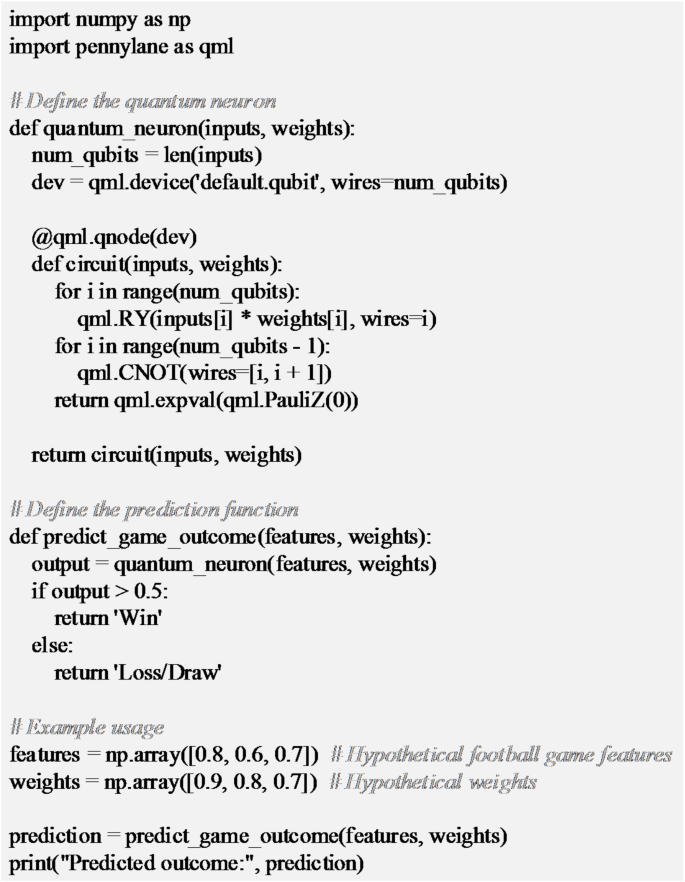

In quantum computing, optimizing qubits is crucial for system performance and computational efficiency, and the primary focus of qubit optimization is improving qubit quality [32]. This involves studying the physical implementation of qubits and using advanced materials and manufacturing processes to reduce environmental interference, thereby extending their coherence time and improving their stability. The qubit initialization process must also be designed carefully: choosing initial quantum state distributions suited to the computational task and data characteristics lays a solid foundation for subsequent computation and accelerates algorithm convergence. At the quantum gate level, gate implementations are optimized by precisely controlling parameters and shortening operation times to minimize gate-induced errors. Moreover, designing quantum algorithms that are well matched to specific qubit architectures can fully exploit the capabilities of the qubits [4]. Quantum error correction is another critical component: errors are monitored and corrected during computation to ensure accurate and reliable results. In addition, using classical computing for the preprocessing and postprocessing stages of quantum computation can considerably improve overall efficiency without substantially increasing hardware costs. Finally, on the hardware side, continuous improvements to the physical structure of quantum devices, including the coupling strength and control precision between qubits, create the conditions for more complex and precise quantum computing tasks [33]. Figure 2 illustrates the algorithm design for optimizing a two-layer neural network using qubits.

Fig. 2. The algorithm design for optimizing a two-layer neural network using qubits.

In Fig. 2, the algorithm defines the QNE and the prediction function and processes the input features and weights through quantum operations. The quantum circuits are defined with the PennyLane library, which simplifies their construction and is used to optimize the performance of the QNN. PennyLane plays a central role in building and optimizing quantum circuits within the QNN framework: it provides predefined quantum gate operations and circuit templates, which simplify circuit construction, lower the technical barrier for developers, and reduce both the amount of code and the likelihood of errors. Through its optimization and resource-management tools, PennyLane can help adjust the sequence and parameters of quantum gate operations to reduce errors and resource consumption. This improves the operational efficiency and computational accuracy of the quantum circuits, which in turn enhances the overall performance of the QNN, accelerates model training and prediction, and supports the development of quantum ML applications.
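Figure 2 is not reproduced in the text, so the sketch below only approximates the kind of circuit it describes: features are angle-encoded, and each variational layer applies a trainable RY rotation to every qubit followed by a ring of CNOTs; passing two rows of weights gives a two-layer network. In PennyLane such a circuit would typically be assembled from templates like `qml.AngleEmbedding` and `qml.BasicEntanglerLayers` inside a `qml.qnode`; here a small dependency-free state-vector simulator is used instead so that the sketch runs without PennyLane installed. The layer structure and function names are assumptions.

```python
import math


def apply_ry(state, n, q, theta):
    """Apply RY(theta) to qubit q of an n-qubit real state vector
    (qubit 0 is the most significant bit of the basis index)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << (n - 1 - q)
    out = state[:]
    for i in range(len(state)):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            out[i] = c * a0 - s * a1
            out[i | bit] = s * a0 + c * a1
    return out


def apply_cnot(state, n, control, target):
    """Flip `target` on every basis state in which `control` is 1."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    out = state[:]
    for i in range(len(state)):
        if i & cbit:
            out[i] = state[i ^ tbit]
    return out


def layered_circuit(features, weights):
    """Angle-encode `features`, then apply one variational layer per row of
    `weights` (RY on every qubit, then a ring of CNOTs); return <Z> on qubit 0."""
    n = len(features)
    if n < 2:
        raise ValueError("the CNOT ring needs at least two qubits")
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    for q, x in enumerate(features):
        state = apply_ry(state, n, q, x)
    for layer in weights:
        for q, w in enumerate(layer):
            state = apply_ry(state, n, q, w)
        for q in range(n):
            state = apply_cnot(state, n, q, (q + 1) % n)
    top = 1 << (n - 1)
    return sum(-a * a if i & top else a * a for i, a in enumerate(state))
```

For example, `layered_circuit([0.3, 1.1, 2.0], [[0.5, -0.2, 0.9], [1.3, 0.4, -0.7]])` evaluates a three-qubit, two-layer circuit and returns a value in [−1, 1] that could serve as one output score. The simulator stores all \(2^n\) amplitudes, so it is only practical for a handful of qubits.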

The concepts of quantum states, superposition, and entanglement offer a distinctive perspective on football match prediction. A quantum state can be viewed as an abstract description that encompasses multiple combinations of information simultaneously. In the context of football, this means that the multiple potential outcomes of a match can be considered together, overcoming the limitation of traditional methods that presuppose a single deterministic result. The superposition principle allows different match-related factors, such as team tactics and player conditions, to coexist in superposed form within the system described by the quantum state; they resolve into a specific state only upon observation, that is, as the match actually unfolds. For example, before a match, a team’s offensive strategy may encompass several potential choices that are not mutually exclusive but exist in superposition until specific match situations trigger the strategy actually executed. This provides a more comprehensive basis for analyzing a team’s potential actions and broadens the information available for prediction. Quantum entanglement describes strong, non-local correlations between different quantum systems. From the perspective of a football match, the factors that influence a team, such as individual player performance, tactical cooperation, and the home atmosphere, can be viewed as distinct quantum systems. These factors may be entangled, so that the state of one cannot be described independently of the others even when they are physically separate. For example, the enthusiasm of home supporters (a home-environment factor) may be entangled with the excitement and improved performance of home players (an individual-performance factor), and this entanglement can have a combined effect on the match outcome. By accounting for such entanglement in the prediction process, the model can capture the complex interactions between factors more accurately, improving the accuracy and scientific basis of outcome predictions. This offers a new framework for football match prediction that goes beyond traditional analytical methods.
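The strategy and crowd examples above can be written in standard notation. The three-strategy state and the binary crowd/performance encoding below are hypothetical illustrations, not quantities estimated in this study. A pre-match offensive strategy with three candidate choices \(s_1, s_2, s_3\) is a normalized superposition whose squared amplitudes give the probabilities observed once the match resolves the choice:

$$|\psi\rangle=\sum_{m=1}^{3}\alpha_m\,|s_m\rangle,\qquad P(s_m)=|\alpha_m|^2,\qquad \sum_{m=1}^{3}|\alpha_m|^2=1$$

For example, \(\alpha=(\sqrt{0.5},\sqrt{0.3},\sqrt{0.2})\) gives \(P(s_1)=0.5\), \(P(s_2)=0.3\), and \(P(s_3)=0.2\). An entangled pair encoding crowd enthusiasm \(c\) and home-player performance \(p\) (0 = low, 1 = high) is

$$|\Phi\rangle=\frac{1}{\sqrt{2}}\left(|0\rangle_c|0\rangle_p+|1\rangle_c|1\rangle_p\right),$$

which cannot be written as a product \(|a\rangle_c\otimes|b\rangle_p\). Measuring it yields \(00\) or \(11\), each with probability \(1/2\): each factor on its own is uncertain, but the two outcomes always agree.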

QNNs differ markedly from traditional neural networks in architecture and performance, which gives them practical advantages in football match prediction. Architecturally, traditional neural networks consist of classical neurons that transmit and process signals as classical bits. Their structure is typically layered, with input, hidden, and output layers, and information is transferred and transformed through weighted connections between neurons. QNNs instead use qubits as the fundamental information processing units. A qubit can exist in a superposition of 0 and 1, which allows a single quantum neuron to process multiple information pathways simultaneously and increases the parallelism and efficiency of information processing. In addition, the QNN architecture incorporates quantum gate operations, which play a role similar to activation functions in traditional neural networks but have quantum mechanical properties. These gates can transform and entangle qubits, enabling the network to capture complex relationships within the data. For example, when processing the many interrelated factors in a football match (such as player coordination, tactical compatibility, and player characteristics), quantum gate operations can exploit entanglement to uncover potential associations among these factors, whereas traditional neural networks struggle to capture such highly intertwined nonlinear relationships. In terms of performance, traditional neural networks often require large amounts of training data and long training times to optimize their weights and achieve satisfactory predictions, especially for large-scale, high-dimensional data with complex nonlinear relationships. As data complexity increases, their performance gains tend to plateau, and the model may become trapped in local optima, limiting prediction accuracy. In contrast, a QNN, with its qubit-based superposition and entanglement, can explore a broader solution space with relatively less training data and shorter training times, and can therefore capture hidden patterns and relationships in the data more effectively. Football match data are highly dynamic and uncertain and contain many factors (such as sudden changes in player condition or unexpected events during the match) that are difficult to model accurately with traditional methods. The QNN can adapt more quickly and accurately to such variable data, improving prediction accuracy and stability. For instance, when real-time match data are updated, a QNN can adjust its predictions more agilely and provide timelier, more precise forecasts of the match’s progress, whereas traditional neural networks may respond slowly to rapid data changes, increasing prediction errors. In short, the architectural and performance differences between QNNs and traditional neural networks give QNNs a clear advantage in football match prediction and make them a more efficient and accurate solution to the complex problem of predicting match outcomes.

Experimental design and experimental data

This study predicts football match results with an optimized QNN, with the aims of improving QNN technology and improving the prediction of football matches. The designed model is trained, tested, and evaluated, and it is compared with other advanced models to assess its performance gains. The comparison models are the Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), Back Propagation Neural Network (BPNN), Transformer, Convolutional Recurrent Neural Network (CRNN), and Connectionist Temporal Classification (CTC).

Four datasets are used for training. (1) The International Football Match Outcome (1872–2020) dataset covers 41,586 international match results, from the first official international match in 1872 to 2020, including competitions ranging from the Fédération Internationale de Football Association (FIFA) World Cup and other international tournaments to friendly matches. (2) The Football League dataset spans multiple seasons, numerous teams, and a large number of matches. For each match it provides detailed information such as scores, goal scorers, possession, and shot attempts, as well as seasonal team performance data and individual player statistics; its hundreds of thousands of data points offer ample material for in-depth analysis and prediction. (3) The Spanish Football League Match dataset covers multiple seasons and several league levels, such as La Liga and Segunda División, with thousands of matches involving numerous teams. Each match includes rich data on tactical arrangements, player performance, and referee decisions. (4) The Football Match dataset covers 9,074 matches from the top five European leagues across the 2011/2012 to 2016/2017 seasons, totaling 941,009 events. Built by integrating several data sources and reverse engineering textual commentary, it includes extensive data covering over 90% of the matches.

To ensure data quality and usability, and to make the study transparent, these datasets are processed through a series of rigorous steps. Missing data are handled with methods chosen according to the type and characteristics of each variable. Consider, for example, a match in the International Football Match Outcome dataset whose weather conditions are missing. The match is first compared with other matches with similar characteristics, such as match time, location, and competition level, and the weather data of those matches are used for an initial imputation. If not enough similar matches are available, external professional meteorological databases are consulted: historical weather data for the match location, combined with the approximate time of the match day, are used to infer the most likely weather conditions and fill in the missing values.

For feature engineering, several techniques are used to extract additional value from the data. For player performance, common indicators such as goals and assists are complemented by on-field activity data: the proportion of a player’s touches made in high-intensity confrontation areas relative to their total touches is calculated to measure the player’s involvement and influence in critical areas, and is defined as the “key area touch rate.” For team data, in addition to regular statistics such as match results (win, loss, draw) and points, a “home advantage index” is constructed from the team’s goals scored, goals conceded, win rate, and audience attendance in home matches, combined through a specific weighting scheme to assess home-field advantage more accurately. These imputation and feature engineering steps optimize the quality of the dataset and provide a solid foundation for training the outcome prediction model, enhancing the overall reliability and scientific validity of the study.

Combining different datasets, such as the International Football Match Outcome data and the detailed European league data, markedly improves the predictive accuracy of the QNN model. The international match data reflect the styles, tactics, and player characteristics of teams from different regions, enriching the model’s understanding of match outcomes across diverse football styles, while the European league data provide rich detail, including long-term team performance, player injuries, and home-field advantage. Combining these datasets exposes the model to more scenarios and factor combinations, helping it learn the complex relationships between match outcomes and the various factors. This improves prediction accuracy for unseen matches and reduces the biases and limitations of relying on a single data source.
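The imputation rule and the two engineered features described above can be sketched as follows. The weather record is reduced to a single temperature value, the `min_similar` threshold stands in for "enough similar matches", and the `HOME_WEIGHTS` coefficients are placeholders, since the text does not disclose the study’s weighting scheme; all names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


def key_area_touch_rate(high_intensity_touches, total_touches):
    """Share of a player's touches made in high-intensity confrontation areas."""
    return high_intensity_touches / total_touches if total_touches else 0.0


# Hypothetical weights; the study's actual weighting scheme is not given.
HOME_WEIGHTS = {"goals_for": 0.3, "goals_against": -0.2, "win_rate": 0.4, "attendance": 0.1}


def home_advantage_index(goals_for, goals_against, win_rate, attendance, w=HOME_WEIGHTS):
    """Weighted sum of home goals scored/conceded per match, home win rate,
    and stadium fill ratio (inputs assumed already normalized)."""
    return (w["goals_for"] * goals_for + w["goals_against"] * goals_against
            + w["win_rate"] * win_rate + w["attendance"] * attendance)


@dataclass
class MatchInfo:
    """Hypothetical match record; temperature=None marks missing weather."""
    month: int
    venue: str
    level: str
    temperature: Optional[float] = None


def impute_temperature(target, history, min_similar=3, fallback=None):
    """Fill a missing temperature with the mean over matches sharing venue,
    month, and competition level; if fewer than `min_similar` exist, return
    `fallback` (e.g., a value looked up in an external weather archive)."""
    if target.temperature is not None:
        return target.temperature
    key = (target.venue, target.month, target.level)
    similar = [m.temperature for m in history
               if m.temperature is not None and (m.venue, m.month, m.level) == key]
    return mean(similar) if len(similar) >= min_similar else fallback
```

When too few similar matches exist, the function returns `fallback`, which in the described procedure would come from an external historical weather database for the match location and date.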

Finally, this study uses detailed match records of major European football leagues from 2008 to 2022, obtained from the Kaggle public dataset “European Football Database,” to train the model for predicting this year’s European Championship. However, several key issues that have not been fully explored arose during the research. Because the data come solely from historical records of specific European leagues, their limitations may introduce biases. Differences between leagues in playing style, distribution of team strength, match rules, and competitive environment may shape the patterns and features the model learns, and these patterns may not fully transfer to this year’s European Championship. For example, some leagues favor attacking play while others emphasize defense, and the European Championship, which brings together national teams with diverse playing styles, may differ in character from any of these leagues. If these potential biases are not adequately considered, the accuracy and reliability of the model’s predictions for the European Championship may suffer. Future research should therefore analyze and address the issues arising from the data’s reliance on specific leagues and tournaments to improve the robustness and applicability of the model’s predictions.